- Equal width binning

- Predefined number of cut points per dimension (m)

- Number of dimensions (n)

- For each sequence node, there are (m+1)^n history nodes => lots of nodes!

- Used for the tree and to update the particle filter
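The equal-width binning above can be sketched as follows. This is a minimal illustration, not the project's actual implementation: the function names (`bin_index`, `discretize`, `max_history_nodes`) and the per-dimension `lows`/`highs` bounds are assumptions made for the example. With m cut points, each dimension is split into m + 1 bins, so n dimensions give (m + 1)^n possible discretized observations.

```python
from typing import Sequence, Tuple


def bin_index(value: float, low: float, high: float, m: int) -> int:
    """Map a value to one of m + 1 equal-width bins over [low, high].

    m is the number of cut points; values outside the range are clamped
    into the first or last bin (an assumption made for this sketch).
    """
    width = (high - low) / (m + 1)
    idx = int((value - low) // width)
    return min(max(idx, 0), m)


def discretize(obs: Sequence[float], lows: Sequence[float],
               highs: Sequence[float], m: int) -> Tuple[int, ...]:
    """Discretize an n-dimensional observation into a tuple of bin indices."""
    return tuple(bin_index(v, lo, hi, m) for v, lo, hi in zip(obs, lows, highs))


def max_history_nodes(m: int, n: int) -> int:
    """Upper bound on history nodes below a single sequence node."""
    return (m + 1) ** n


if __name__ == "__main__":
    print(discretize((0.1, 0.95), (0.0, 0.0), (1.0, 1.0), m=3))
    print(max_history_nodes(m=3, n=5))
```

For example, with m = 3 cut points and n = 5 observation dimensions, each sequence node can have up to 4^5 = 1024 history nodes, which is why the branching factor grows so quickly.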

- Based on [1]

- Actions: move forward, turn left, turn right, turn around

- Reward: -1 per action

- Observations: 4 wall detection sensors (with Gaussian noise), 1 landmark / goal detection sensor

- Belief: location of agent (agent's orientation is known)

- Initial belief distribution: uniform over all free cells

- Currently available maps (0 = free, 1 = wall, G = goal, L = landmark):

- Single-row map: `0000G`

- Two-row map: `00000000000` (top row), `11L1L1L1G11` (bottom row)
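The maze setup described above can be sketched roughly as below. This is a hedged illustration under several assumptions that the notes do not confirm: only cells marked `0` count as free for the initial belief (goal and landmark cells are excluded), the four wall sensors point in fixed directions (north, east, south, west), cells outside the map count as walls, landmark and goal cells are not seen as walls, and the sensor noise is additive. All names (`MAP_ROWS`, `initial_belief`, `wall_readings`, and so on) are hypothetical.

```python
import random
from typing import Dict, List, Tuple

Cell = Tuple[int, int]

# Two-row map from the list above (0 = free, 1 = wall, G = goal, L = landmark).
MAP_ROWS = ["00000000000", "11L1L1L1G11"]
REWARD_PER_ACTION = -1
ACTIONS = ("move forward", "turn left", "turn right", "turn around")


def free_cells(rows: List[str]) -> List[Cell]:
    """Cells marked '0'. Assumption: G and L cells are not counted as free."""
    return [(r, c) for r, row in enumerate(rows)
            for c, ch in enumerate(row) if ch == "0"]


def initial_belief(rows: List[str]) -> Dict[Cell, float]:
    """Uniform distribution over all free cells."""
    cells = free_cells(rows)
    return {cell: 1.0 / len(cells) for cell in cells}


def is_wall(rows: List[str], r: int, c: int) -> bool:
    """Assumption: anything outside the map is treated as a wall."""
    if r < 0 or r >= len(rows) or c < 0 or c >= len(rows[r]):
        return True
    return rows[r][c] == "1"


def wall_readings(rows: List[str], cell: Cell, noise_std: float,
                  rng: random.Random) -> List[float]:
    """Four wall sensors (N, E, S, W): 1.0 for wall, 0.0 otherwise, plus Gaussian noise."""
    r, c = cell
    neighbours = [(r - 1, c), (r, c + 1), (r + 1, c), (r, c - 1)]
    return [float(is_wall(rows, nr, nc)) + rng.gauss(0.0, noise_std)
            for nr, nc in neighbours]


if __name__ == "__main__":
    rng = random.Random(0)
    belief = initial_belief(MAP_ROWS)
    print(len(belief), "free cells, each with probability", 1.0 / len(belief))
    print(wall_readings(MAP_ROWS, (0, 0), noise_std=0.1, rng=rng))
```

The continuous, noisy sensor readings produced this way are the kind of observation that would need to be discretized before being used as keys in a search tree.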

[1] Michael Littman, Anthony Cassandra, and Leslie Kaelbling. Learning policies for partially observable environments: Scaling up. In Armand Prieditis and Stuart Russell, editors, Proceedings of the Twelfth International Conference on Machine Learning, pages 362--370, San Francisco, CA, 1995. Morgan Kaufmann.

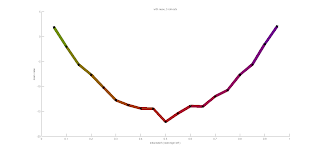

Tree Visualization