Progress

- Environment overview:

- Light-dark domain with a discrete state space (grid world)

- If a move would end outside the grid, the agent re-enters the grid on the opposite side (e.g., it leaves on the left side and re-enters on the right side)

- Initial belief: uniform over all possible states (except for the goal state)

- Current set-up (A=agent, G=goal, L=light):

***L*

**AL*

*G*L*

***L*

***L*
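The wrap-around rule and the initial belief described above can be sketched as follows. This is a minimal illustration, not the project's code: the names `step`, `initial_belief`, and `MOVES`, the `(row, col)` state encoding, and the four move actions are assumptions made for the example; the 5x5 size is read off the set-up drawn above.

```python
# Hypothetical sketch: (row, col) states on a 5x5 torus-like grid.
ROWS, COLS = 5, 5
MOVES = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}

def step(state, action, rows=ROWS, cols=COLS):
    """Return the next (row, col); leaving one edge re-enters on the opposite edge."""
    r, c = state
    dr, dc = MOVES[action]
    # The modulo implements the wrap-around in both dimensions.
    return ((r + dr) % rows, (c + dc) % cols)

def initial_belief(goal, rows=ROWS, cols=COLS):
    """Uniform belief over every cell except the goal cell."""
    states = [(r, c) for r in range(rows) for c in range(cols) if (r, c) != goal]
    p = 1.0 / len(states)
    return {s: p for s in states}
```

With the goal at `(2, 1)` as in the drawing, `initial_belief((2, 1))` assigns equal mass to the remaining 24 cells, and `step((1, 0), "left")` lands in the rightmost column of the same row.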

- Behavior of the agent:

- No tendency to go in a particular direction in the first step

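- Belief update: there seems to be an error either in how the probability density function is used to update the belief or in the density function itself (it returns values > 1). Note that a density value may legitimately exceed 1 when the standard deviation is small, so a missing normalization of the updated belief is one possible cause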

- Implemented a visualization tool for the tree
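Regarding the belief-update issue noted above, the sketch below shows a correction step that weights each state by the observation density and then normalizes. It is only an illustration under assumptions: `gaussian_pdf` and `update_belief` are hypothetical names, the belief is assumed to be a dictionary from state to probability, and the likelihood is passed in as a function because the noise model used at this stage is not stated here.

```python
import math

def gaussian_pdf(x, mean, std):
    """Density of N(mean, std^2) at x. This is a density, so it may exceed 1."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))

def update_belief(belief, likelihood):
    """Weight each state by the density of the received observation, then normalize.

    belief: dict mapping state -> probability (after the transition step)
    likelihood: function mapping state -> density of the observation in that state
    """
    weighted = {s: p * likelihood(s) for s, p in belief.items()}
    total = sum(weighted.values())
    if total == 0.0:
        # Observation has zero density everywhere; fall back to the prior.
        return dict(belief)
    return {s: w / total for s, w in weighted.items()}

# Two states located at 0.0 and 1.0, observation 0.05, noise std 0.1.
prior = {0.0: 0.5, 1.0: 0.5}
posterior = update_belief(prior, lambda s: gaussian_pdf(0.05, s, 0.1))
```

In this example the raw density at the nearer state is larger than 1, yet the normalized posterior still sums to 1, which is the property the belief needs.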

Progress

- Extended the incremental regression tree learner to multidimensional, continuous observations

- Implemented a discrete state space version of the light-dark environment:

- Agent A is placed in a grid world and has to reach a goal location G

- The grid world is very dark except for a light source in one column, so the agent may first have to move away from its goal in order to localize itself

- Actions: move up, down, left, right

- Observations: location (x, y) corrupted by zero-mean Gaussian noise with a standard deviation given by the following expression, which is quadratic in x under the square root:

$\mathrm{STD} = \sqrt{0.5\,(x_{\mathrm{best}} - x)^2 + K}$

- Rewards: -1 for moving, +10 for reaching the goal

- Finished the background chapter
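The observation model listed above can be sketched as follows. The function names are hypothetical, and several details are assumptions rather than facts from these notes: `x_best` is taken to be the column of the light source, the same standard deviation is applied independently to both coordinates, and the value of `K` is left as a parameter because it is not given.

```python
import math
import random

def observation_std(x, x_best, k):
    """STD = sqrt(0.5 * (x_best - x)^2 + K), smallest in the column x_best."""
    return math.sqrt(0.5 * (x_best - x) ** 2 + k)

def sample_observation(state, x_best, k, rng=random):
    """Return a noisy (x, y) reading of the true location.

    Assumption: both coordinates receive independent zero-mean Gaussian noise
    with the same standard deviation, which depends only on x.
    """
    x, y = state
    std = observation_std(x, x_best, k)
    return (rng.gauss(x, std), rng.gauss(y, std))
```

The standard deviation is smallest at `x == x_best`, where it equals `sqrt(K)`, and grows with the distance from that column.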

Experimental Set-up

- Environment: 5x10 light-dark domain, light source in column 9:

G*******L*

********L*

******A*L*

********L*

********L*

- Number of episodes: 1,000

- Max. steps: 25

- UCT exploration constant C: 10

- Discount factor: 0.95

- "Optimal" denotes the MDP solution, based on the shortest distance from the agent's starting location to the goal location
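A rough sketch of how such a shortest-path baseline could be computed is given below. It rests on assumptions that these notes do not confirm: whether the grid wraps around in this experiment (exposed as the `wrap` flag), and that the arriving move receives both the move reward and the goal reward. The function names and the `(row, col)` encoding are hypothetical.

```python
def shortest_distance(start, goal, rows, cols, wrap=False):
    """Number of moves between two (row, col) cells using up/down/left/right."""
    dr = abs(start[0] - goal[0])
    dc = abs(start[1] - goal[1])
    if wrap:
        # With wrap-around, going the other way round may be shorter.
        dr = min(dr, rows - dr)
        dc = min(dc, cols - dc)
    return dr + dc

def optimal_return(distance, discount=0.95, move_reward=-1.0, goal_reward=10.0):
    """Discounted return of walking `distance` moves straight to the goal.

    Assumption: the final move earns move_reward plus goal_reward.
    """
    total = 0.0
    for t in range(distance):
        reward = move_reward
        if t == distance - 1:
            reward += goal_reward
        total += (discount ** t) * reward
    return total

# Agent at row 2, column 6 and goal at row 0, column 0 (0-based) in the 5x10 grid.
d = shortest_distance((2, 6), (0, 0), rows=5, cols=10)
baseline = optimal_return(d)
```

Passing `wrap=True` changes the distance, so the baseline value depends on which transition rule the experiment actually used.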

Results

[Figure not recovered: results, normal scaling]

[Figure not recovered: results, x-axis on a log scale]

Planning

- Try a larger grid world (in the current grid the agent does not show the expected behavior of going right for the first few steps and then left)

- Continue writing

- Meeting tomorrow at 13:00

Experimental Set-up

- One action performed in the real world, from different initial beliefs

- Probability(state = tiger left) = initial belief

- Number of episodes: 5,000

- Algorithms:

- Re-use: computes the belief range for each leaf of the observation tree and splits a leaf only if the distance between the belief ranges that the two new children would have is below some (very low) threshold

- Deletion: the standard COMCTS algorithm

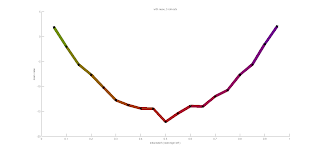

Legend

- Re-use: solid line

- Deletion: dotted line

- The color of each line segment (p1,p2) is the RGB-mixture of the average percentages of the selected actions at the points p1 and p2

- Black markers indicate the data points (i.e., the initial beliefs)

Results

Experimental Set-up

- One step in the real world from different initial beliefs

- Color is the average RGB-mixture of the percentages of the selected actions at the two end points of each line segment

- red: listen

- green: open left

- blue: open right
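The coloring rule above can be written down in a few lines. This is a sketch with a hypothetical function name; it assumes the action percentages at each end point are given in the order (listen, open left, open right) on a 0 to 100 scale, and it returns RGB components in the range 0 to 1.

```python
def segment_color(p1, p2):
    """Average the action percentages of the two end points and map them to RGB.

    p1, p2: (listen, open_left, open_right) percentages in [0, 100].
    Returns (red, green, blue) with each component in [0, 1].
    """
    return tuple(((a + b) / 2.0) / 100.0 for a, b in zip(p1, p2))

# One end point always listens, the other always opens the left door.
color = segment_color((100, 0, 0), (0, 100, 0))
```

A segment between a pure "listen" point and a pure "open left" point therefore gets an equal mix of red and green.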

Results

Experimental Set-up

- Environment: Infinite Horizon Tiger Problem (stopped after 2 steps)

- Roll-outs: 500

- The reported standard deviation is that of the Gaussian noise added to the observation signal

Results

Algorithm:

- Computes, for each leaf of the regression tree, the corresponding range in the belief space

- Re-uses leaves with a similar range
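One possible reading of the two steps above, for the Tiger problem with a noisy continuous observation, is sketched below. Everything specific in it is an assumption made for illustration: the signal means `mu_left` and `mu_right`, the representation of a leaf as a finite observation interval, the distance measure between ranges, and the threshold value. It is not the implementation used for the results.

```python
import math

def gaussian_pdf(x, mean, std):
    """Density of N(mean, std^2) at x."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))

def posterior_left(belief_left, obs, std, mu_left=-1.0, mu_right=1.0):
    """P(tiger left | obs), assuming the signal is mu_left or mu_right plus Gaussian noise."""
    left = belief_left * gaussian_pdf(obs, mu_left, std)
    right = (1.0 - belief_left) * gaussian_pdf(obs, mu_right, std)
    return left / (left + right)

def belief_range(belief_left, obs_lo, obs_hi, std):
    """Range of posteriors reachable from observations in [obs_lo, obs_hi].

    With equal noise on both signals the posterior is monotone in the
    observation, so the two interval end points bound the range.
    Assumes finite interval bounds.
    """
    a = posterior_left(belief_left, obs_lo, std)
    b = posterior_left(belief_left, obs_hi, std)
    return (min(a, b), max(a, b))

def similar(range_a, range_b, threshold=0.05):
    """Treat two leaves as re-usable when both range end points are close."""
    gap = max(abs(range_a[0] - range_b[0]), abs(range_a[1] - range_b[1]))
    return gap <= threshold

leaf_range = belief_range(0.5, -1.0, 0.0, std=1.0)
```

Comparing end points is only one of several plausible distance measures; an overlap-based measure would serve the same purpose.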

Results:

[Figure not recovered: first 1,000 roll-outs]